123: The Perils of Automated Justice

The Fallacy of AI-Based Policing

The Trump regime and its backers, figures like Peter Thiel and Elon Musk, are pushing for a world where artificial intelligence polices and disciplines. In this vision, automated justice is not just a tool, but the foundation of a political order that seeks to control populations under the guise of efficiency and objectivity. AI-based policing, predictive crime analytics, and biometric surveillance are being integrated into law enforcement at an alarming rate, often with little oversight. But behind the sleek façade of these technologies lies a deeply flawed system, one that amplifies biases, enables systemic abuses, and further entrenches authoritarian power.

While these AI-driven justice systems claim to be neutral, reality tells a different story. Researchers and journalists are increasingly uncovering the disturbing ways law enforcement misuses these tools, bending them to their own biases, circumventing legal standards, and making irreversible mistakes. The promise of unbiased machine-driven law enforcement has quickly unraveled, exposing the dangerous myth of automated justice.

Consider the case of Robert Williams. In January 2020, Williams was arrested in front of his wife and children in Detroit after being falsely identified by facial recognition software. He spent roughly 30 hours in custody before authorities admitted their mistake, but the damage had already been done: humiliation, distress, and a permanent mark on his record. His case is not unique. Investigations show that AI-powered facial recognition disproportionately misidentifies Black and brown individuals, leading to wrongful arrests, police harassment, and shattered lives.

A recent Washington Post investigation details the growing number of people facing similar fates due to flawed AI systems. As police departments become more reliant on these technologies, they begin treating AI-generated "matches" as infallible evidence rather than what they really are: fallible, probabilistic guesses. This is especially dangerous when officers already operate within a culture that presumes guilt rather than innocence.
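
To see why a "match" is only a probabilistic guess, it helps to run the arithmetic. The sketch below is an illustration, not a description of any real system: the function name, the database size, and both error rates are hypothetical numbers chosen for the example, and it assumes the person in the photo appears exactly once in the database.

```python
def expected_match_precision(database_size, true_positive_rate, false_positive_rate):
    """Expected share of flagged candidates who are the actual person.

    Assumes (hypothetically) that the real person is in the database
    exactly once and that every other entry has the same independent
    chance of being flagged by mistake.
    """
    expected_true_hits = true_positive_rate
    expected_false_hits = (database_size - 1) * false_positive_rate
    total_hits = expected_true_hits + expected_false_hits
    if total_hits == 0:
        return 0.0  # nothing is ever flagged
    return expected_true_hits / total_hits


if __name__ == "__main__":
    # Hypothetical inputs: one million photos, 99% hit rate,
    # one false alarm per 10,000 comparisons.
    precision = expected_match_precision(1_000_000, 0.99, 0.0001)
    print(f"Share of flagged candidates who are the right person: {precision:.2%}")
```

With these made-up inputs, only about one flagged candidate in a hundred is the right person, even though the system sounds highly accurate on paper. The larger the database searched, the worse that ratio gets.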

Beyond facial recognition, predictive policing software is reinforcing systemic discrimination. These AI models analyze historical crime data to forecast where crimes are "likely" to occur, leading to over-policing of Black and low-income neighborhoods. But as many critics have pointed out, these tools don't predict crime; they predict where police will look for crime. Instead of preventing harm, AI policing ensures that already marginalized communities remain under constant surveillance, while wealthier, whiter areas escape the same attention despite comparable rates of crime.
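
The feedback loop critics describe can be shown with a toy model. This is a deliberately simplified sketch under stated assumptions, not the algorithm of any actual product: the function name and all numbers are hypothetical, patrols are sent out in proportion to past records, and police only record incidents where they are present.

```python
def simulate_patrol_feedback(true_rates, initial_records,
                             patrols_per_round=100, rounds=10):
    """Toy model: patrols follow past records, records follow patrols.

    true_rates: underlying incident rate per patrol in each neighborhood.
    initial_records: historical recorded incidents per neighborhood.
    Returns the recorded incident counts after the given number of rounds.
    """
    records = list(initial_records)
    for _ in range(rounds):
        total = sum(records)
        # Patrols are allocated in proportion to what the data already shows.
        patrols = [patrols_per_round * r / total for r in records]
        # Incidents are only recorded where patrols are present to see them.
        observed = [p * rate for p, rate in zip(patrols, true_rates)]
        records = [r + o for r, o in zip(records, observed)]
    return records


if __name__ == "__main__":
    # Two neighborhoods with IDENTICAL true rates, but a skewed history.
    final = simulate_patrol_feedback([0.1, 0.1], [60, 40])
    share = final[0] / sum(final)
    print(f"Final records: {final}, share in first neighborhood: {share:.0%}")
```

Under these assumptions the first neighborhood keeps 60 percent of all recorded incidents no matter how long the loop runs, and the absolute gap between the two keeps widening, even though the underlying rates are identical. The data never corrects itself because it measures where police went, not where crime happened.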

The Architects of Automated Oppression

Peter Thiel’s Palantir has embedded itself deeply into U.S. law enforcement and intelligence agencies, supplying tools that integrate massive datasets—from license plate readers to social media activity—to generate risk assessments on individuals. His belief in AI-driven governance aligns with his openly anti-democratic views. Thiel has long advocated for reducing public participation in governance and placing more trust in elite technocrats and machine-driven systems. Automated policing is the perfect tool for this vision: an unaccountable, opaque system that extends the power of law enforcement while diminishing public scrutiny.

Elon Musk, while more publicly associated with AI development through ventures like xAI, has also supported this shift toward AI-driven security. With investments in autonomous robotics, facial recognition, and predictive modeling, Musk sees a future where automation manages human behavior at scale. His ties to law enforcement contracts, particularly in border surveillance and drone technology, suggest that his companies are more than willing to supply the tools necessary for a techno-authoritarian state.


Grassroots Resistance Against Automated Injustice

While billionaires push forward their AI-driven policing agenda, resistance is mounting from activists, journalists, and legal advocates who see the profound dangers in allowing machines to dictate justice.

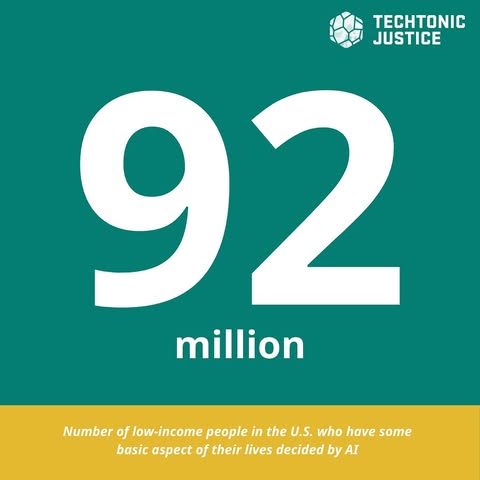

Organizations like TechTonic Justice are leading the fight against AI-driven policing. TechTonic Justice provides legal aid to individuals wrongfully targeted by automated systems, pressures governments to impose stricter regulations, and works to increase transparency in how AI tools are used in law enforcement. The group has successfully challenged wrongful arrests, helped secure bans on facial recognition in multiple cities, and continues to expose the hidden biases embedded in AI policing tools.

The EU’s Ban on Social Scoring

Unlike the United States, where AI-based policing is expanding with little resistance from policymakers, the European Union has taken a stand against the rise of automated justice. The EU’s recent ban on social scoring systems, in which AI assigns "trustworthiness" scores to individuals based on their behavior, is among the AI Act prohibitions that took effect in February 2025. It sends a clear message that such systems have no place in democratic societies.

This move should serve as a warning to the U.S., where AI-driven justice systems are beginning to resemble China’s infamous social credit system. The difference? In the U.S., these systems are not run directly by a central state authority; they are privatized, built by tech firms and wielded by law enforcement agencies and corporate security contractors. The consequences, however, are just as dystopian.

Restorative Justice and Community-Led Alternatives

As Canada continues to resist increasing economic and political annexation by the U.S., there is an opportunity to chart an alternative course in justice. AI-based policing and automated sentencing are not inevitable; they are policy choices that can be resisted and rejected.

Indigenous communities in Canada have long championed restorative justice—an approach that focuses on healing, reconciliation, and accountability rather than punitive measures. These models provide a compelling alternative to AI-driven punishment. Instead of using predictive analytics to surveil and control, communities can facilitate mediation, empower individuals, and strengthen social cohesion.

The U.S. experiment with automated justice is already proving disastrous, yet its architects remain committed to expanding its reach. Canada must learn from these failures and invest in systems that prioritize humanity over efficiency, equity over control, and justice over surveillance.

AI-based policing is not the future—it is a dangerous illusion that perpetuates systemic injustice while giving authoritarian actors new tools for control. As resistance grows, we must amplify the voices challenging these systems and push for alternatives that truly serve our communities. Automated justice is not justice at all, and the fight against it is one we cannot afford to lose.

Latest episode of Red-Tory is available. We get into some radical ideas about demilitarizing Canada!?
